When moving ETL processes to a new environment, it’s important to test that those processes return the same results as they do in the old environment. We might expect that, given the same input from source data, running the same code, we should see the same output. Of course that’s not always the case, because the data warehouse is not an abstract model (it’s sometimes easy to forget this when all you see is code and data), but a combination of many varied, interconnected pieces of hardware and software. When we change any one portion it’s hard to predict what, if any, difference this will make – so when we change all of them at once, we need to expect the unexpected.

So how can we tell what’s changed? The SAS Compare procedure is a very handy tool in this situation, providing a quick, automated means of determining differences in type and value between one dataset and another. Here’s an example of how I’ve used it recently in testing migrated data:

%macro compare(table, num, key) ;

%let old = old_&table ;

%let new = new_&table ;

%let result = res_&table ;

%let diff = df_&table ;

proc sort data=&newlib..&table out=&new;

by &key ;

proc sort data=&oldlib..&table out=&old;

by &key ;

run ;

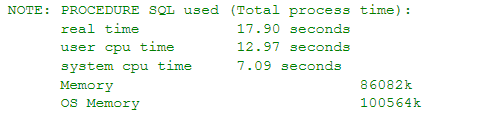

proc compare compare=&new base=&old out=&result

outnoequal outbase outcomp outdiff

outstat=&diff (where=(_type_='NDIF' and _base_ <> 0)) listobs;

id &key ;

run ;

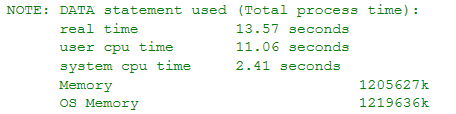

data _diff ;

dsid = open("&new") ;

set &diff end=last;

length column $40 table $40 type $40 fmt $80 ;

table="&table" ;

column = _var_ ;

varnum = varnum(dsid, _var_) ;

type = vartype(dsid, varnum) ;

length = varlen(dsid, varnum) ;

fmt = varfmt(dsid, varnum) ;

if last then rc=close(dsid) ;

run ;

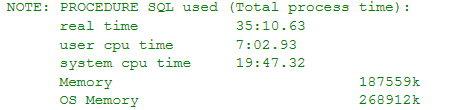

%if &num = 1 %then %do ;

data diff ;

set _diff

(keep=table column varnum type length fmt _base_ _comp_);

run ;

%end ;

%else %do ;

proc append base=diff data=_diff

(keep=table column varnum type length fmt _base_ _comp_);

run ;

%end ;

%mend compare ;

Looking at each dataset in a particular library, the compare macro is run to compare it with its counterpart in the new library (&newlib in the above). There’s a fair bit going on here, so piece by piece, here’s what the macro does.

First, a bit of setup: we are going to be comparing datasets by the supplied &key parameter (rather than by row number), so for the purpose of proc compare we need to sort both sets first. There is of course a new version and an old version of &table, named so that we know which is which. We’re also naming output sets &result as ‘res_&table’ (this will hold rows which differ between versions), and &diff as ‘df_&table’ (this will contain output statistics summarising the comparison).

After sorting the old and new tables, the comparison is run. The old version is supplied as the ‘base=’ argument and the new version is ‘compare=’; the id statement identifies the variable(s) making up the unique key for the sets. The ‘out=’ argument specifies the &result output set, and the modifiers outnoequal, outbase, outcomp and outdiff specify respectively that only observations where there is some difference in values between base and compare, we get the rows from both the base and the compare set, and we also get a row showing the differences for each variable. The ‘outstat=’ argument is set to &diff to hold a set of summary statistics (which we are restricting to results from variables where there is one or more value difference). Finally, the ‘listobs’ option specifies that we get to see a printout of observations where the unique key value is found in only one of the base and compare sets.

In the final part of the macro, we take the &diff set and pull out some metadata about the variables that have tested unequal between sets. All of these &diff sets are appended together to a big output dataset which can be used as a handy summary of differences across the entire library. Note the compare procedure also produces list output which can then be read as a detailed report.