So it has been a very long time since I posted on here, a lot of changes in the meantime. Long story short, I got busy, changed jobs, still busy now, but with the change in scene I have a little more motivation to blog and hopefully some more interesting things to say. I figured I’d start out by posting a quick summary of a technical presentation I gave to the SUNZ quarterly meeting late last year.

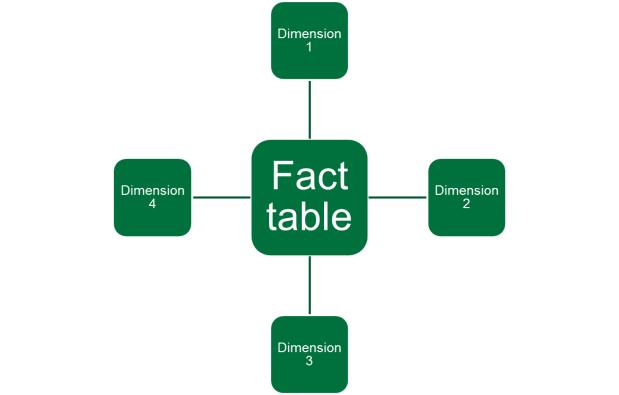

The presentation was a brief look at how to use the SAS data step hash object to replace expensive SQL joins. The scenario I had in mind was a typical one working with a data warehouse, where we join from a large, central fact table to several (typically smaller) dimension tables:

The thing is, even when the dimension tables are (relatively) small, each lookup from the fact exacts a cost, in the form of CPU time – so the more rows in the fact, the more this costs. Not only that, but as the dimensions grow (which they naturally will over time), the CPU time required for each lookup will increase. This is why the quick and easy report which took 5 minutes to run when you wrote it a year ago now keeps you (and perhaps the rest of your reporting batch) waiting for the best part of an hour.

The advantage that the hash object offers is essentially that while growth in the fact still adds to the CPU time required, growth in the dimensions does not. The hash object guarantees (except in situations involving pathological data) constant-time lookup. There can be monster savings here, with some caveats which I’ll get to later. For the moment, here’s a very quick how-to.

First, the (generic) SQL we’re replacing:

    proc sql ;
      create table report as
      select dim1.attr1
           , dim2.attr2
           , dim3.attr3
           , dim4.attr4
           , fac.measure1
      from fact1 fac
      inner join dimension1 dim1 on fac.dim1_pk = dim1.dim1_pk
      inner join dimension2 dim2 on fac.dim2_pk = dim2.dim2_pk
      inner join dimension3 dim3 on fac.dim3_pk = dim3.dim3_pk
      inner join dimension4 dim4 on fac.dim4_pk = dim4.dim4_pk
      ;
    quit ;

The idea with using the data step hash object to replace this is simple: we add a separate hash object for each dimension, containing the keys we are using to join on and the attributes we are adding into the report table. Then for each row in the fact, if we find a match in all dimensions, we add the row into the report.

The code is as follows:

    data report ;
      /* 1 - 'Fake' set statement to add variables into the PDV */
      if 0 then set
        fact1      (keep = measure1)
        dimension1 (keep = dim1_pk attr1)
        dimension2 (keep = dim2_pk attr2)
        dimension3 (keep = dim3_pk attr3)
        dimension4 (keep = dim4_pk attr4)
      ;

      /* 2 - Declare hash objects for each dimension */
      if _n_ = 1 then do ;
        declare hash dim1 (dataset:"dimension1") ;
        dim1.definekey("dim1_pk") ;
        dim1.definedata("attr1") ;
        dim1.definedone() ;

        declare hash dim2 (dataset:"dimension2") ;
        dim2.definekey("dim2_pk") ;
        dim2.definedata("attr2") ;
        dim2.definedone() ;

        declare hash dim3 (dataset:"dimension3") ;
        dim3.definekey("dim3_pk") ;
        dim3.definedata("attr3") ;
        dim3.definedone() ;

        declare hash dim4 (dataset:"dimension4") ;
        dim4.definekey("dim4_pk") ;
        dim4.definedata("attr4") ;
        dim4.definedone() ;
      end ;

      /* 3 - 'Join' rows to the dimensions by matching with the .find() method */
      do until (eof) ;
        /* end= is a set statement option, so it goes outside the dataset options */
        set fact1 (keep = dim1_pk dim2_pk dim3_pk dim4_pk measure1) end = eof ;
        if dim1.find() = 0 and dim2.find() = 0 and dim3.find() = 0 and dim4.find() = 0 then output ;
      end ;
      stop ;

      drop dim1_pk dim2_pk dim3_pk dim4_pk ;
    run ;

As per the comments, the code breaks down into 3 steps:

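1 – Fake a set statement: the hash object is only created at run time, so the data step compiler knows nothing about the variables it will hold. The set statement never executes (the condition is always false), but it is still compiled, which gives the compiler the column metadata it needs to build the PDV.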
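To see the effect of step 1 in isolation, here is a minimal sketch. The two-row `dimension1` table is made up purely for illustration; the point is only that the variables appear in the PDV even though no row is ever read.

```sas
/* Toy dimension table, invented for this illustration */
data dimension1 ;
  input dim1_pk attr1 $ ;
  datalines ;
1 North
2 South
;
run ;

data _null_ ;
  /* Compiled but never executed: the PDV still gets dim1_pk and attr1 */
  if 0 then set dimension1 (keep = dim1_pk attr1) ;

  /* Writes the PDV to the log; both variables exist but hold missing values */
  put _all_ ;
  stop ;
run ;
```

The log should list `dim1_pk` and `attr1` with missing values, alongside the automatic variables, which shows that the set statement contributed metadata only.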

2 – Declare and create the hash objects. The definekey, definedata and definedone methods do the work of defining the hash object, after which SAS loops over the tables named in the ‘dataset’ parameter supplied with the declare statement. For each row the key and value pairs are added into the hash object.

3 – Perform the join by matching key values from the fact table into the dimension hash objects (using the hash object find() method). This is where one fundamental difference between the two approaches becomes apparent. We’re now not joining tables on disk, as we were with the SQL join; the fact table on disk is being matched with the hash objects, which are data structures entirely resident in memory.
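The find() method returns 0 when the key is found and a non-zero code when it is not, which is what the inner join in step 3 relies on. As a hedged sketch of the same mechanism, the variation below keeps unmatched fact rows instead (roughly what a left join would do). The toy tables and the `report_left` name are assumptions for illustration only, not part of the original report.

```sas
/* Toy tables, invented for this illustration */
data dimension1 ;
  input dim1_pk attr1 $ ;
  datalines ;
1 North
2 South
;
run ;

data fact1 ;
  input dim1_pk measure1 ;
  datalines ;
1 10
2 20
3 30
;
run ;

data report_left ;
  /* Add the dimension variables to the PDV */
  if 0 then set dimension1 (keep = dim1_pk attr1) ;

  if _n_ = 1 then do ;
    declare hash dim1 (dataset:"dimension1") ;
    dim1.definekey("dim1_pk") ;
    dim1.definedata("attr1") ;
    dim1.definedone() ;
  end ;

  set fact1 ;

  /* On a miss, find() leaves attr1 untouched, so it would still hold the
     value from the previous matched row; reset it explicitly */
  if dim1.find() ne 0 then call missing(attr1) ;
run ;
```

Every fact row is written out here; the row with `dim1_pk = 3` has no match in the dimension, so its `attr1` is set to missing rather than carrying over a stale value.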

So is it worth it? In a word, yes – but only if you’re willing to trade off a big (sometimes huge) increase in memory consumption against the CPU time you’ll be getting back. To illustrate this, here are some performance stats from a real-life example.

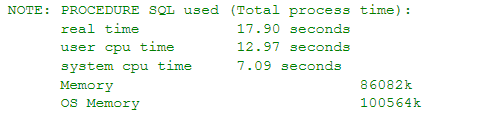

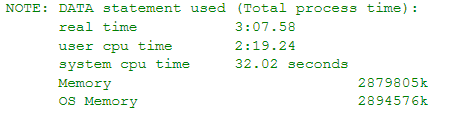

First, a small join – 2 dimensions joined to a small fact table (about 100,000 rows):

Replacing this with data step code using hash objects:

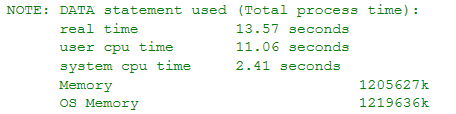

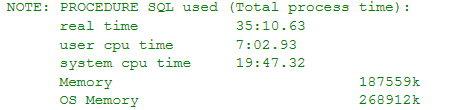

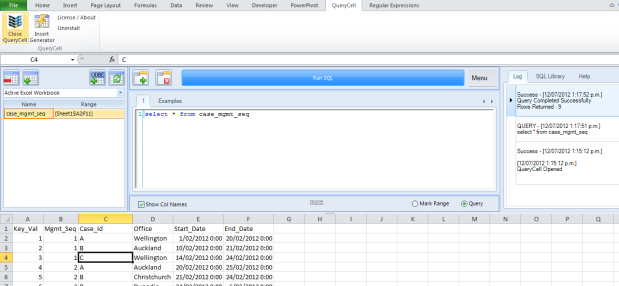

There’s a small saving in CPU time, set against a slight increase in memory consumption. It hardly seems worthwhile replacing this code, but then again it’s not a very long-running report to begin with. The real savings come when we look at the full version – this time, 9 dimensions joined to a somewhat larger fact table (~ 10 million rows). First the SQL join:

Then, the data step version:

Here, replacing the SQL code has reduced the time required by a factor of 10. This is a huge difference and we could be doing backflips and high fives all round right now, but before we kick off the celebrations, take a moment to look at the memory usage.

You’ll see that the memory consumption with the SQL code is less than 300MB, whereas the data step hash code uses a little over 10 times that. In fact, even the data step code against the small fact required over 1GB. The memory usage is linked to the size of the dimensions that you’re shoving into the hash objects, so the decrease in CPU time is being paid for more or less directly with a corresponding increase in memory. So is this a problem? Well, it might be, or it might not be. Obviously it depends on the availability of both these resources – if your server is constantly running out of memory then I’d say yes, it’s a problem. Then again, if your users are always complaining about how long it takes for their reports to run, maybe the hash object is worth a look.
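If you want a rough idea of what a dimension will cost before committing to this approach, the hash object exposes `num_items` and `item_size` attributes. The sketch below multiplies the two for a toy `dimension1` table (invented for illustration). Treat the result as an approximation of the item storage only, assuming it excludes the hash object's own overhead, rather than a precise figure for the step's total memory.

```sas
/* Toy dimension table, invented for this illustration */
data dimension1 ;
  input dim1_pk attr1 $ ;
  datalines ;
1 North
2 South
;
run ;

data _null_ ;
  if 0 then set dimension1 (keep = dim1_pk attr1) ;

  declare hash dim1 (dataset:"dimension1") ;
  dim1.definekey("dim1_pk") ;
  dim1.definedata("attr1") ;
  dim1.definedone() ;

  /* Number of items loaded and the size in bytes of a single item */
  items = dim1.num_items ;
  bytes = dim1.item_size ;

  /* Approximate storage for the items, in megabytes */
  approx_mb = items * bytes / (1024 ** 2) ;
  put items= bytes= approx_mb= ;
  stop ;
run ;
```

Running this against each real dimension and summing the results gives a ballpark for how much memory the full data step is likely to need.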

I delivered a slightly longer form of this as a presentation to SUNZ last year. The slideshow is at the link below (pptx) or a pdf version is also available from the SUNZ website.